Bias Testing Playbook

Interview stakeholders, create a customized bias testing process, and publish a customer-facing white paper to promote transparency.

Process

- Stakeholder interviews: Facilitate sessions across product, data science, compliance, and risk to understand the business problems, data, and tech stack.

- Measurement design: Identify protected-class inference options, the fairness metrics best suited to the AI system, and a decision tree for assigning priority and risk tiers.

- Playbook build: Document step-by-step methods for defining, measuring, and mitigating bias; include sampling, baselines, and thresholds.

- External narrative: Draft a plain-language white paper summarizing the approach, findings, and improvements for the client's customers.
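
As an illustration of the measurement and threshold steps above, the following minimal Python sketch computes per-group selection rates and a disparate impact ratio, then flags groups that fall below a threshold. The data, the group labels, the choice of metric, and the 0.8 threshold (borrowed from the widely cited four-fifths rule) are all assumptions for illustration; the metrics and thresholds in an actual playbook depend on the system under review.

```python
from collections import defaultdict

def selection_rates(outcomes, groups):
    """Return the share of favorable outcomes (1) for each group."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for outcome, group in zip(outcomes, groups):
        totals[group] += 1
        favorable[group] += outcome
    return {group: favorable[group] / totals[group] for group in totals}

def disparate_impact_ratio(rates, reference_group):
    """Divide each group's selection rate by the reference group's rate."""
    reference_rate = rates[reference_group]
    if reference_rate == 0:
        raise ValueError("reference group has no favorable outcomes")
    return {group: rate / reference_rate for group, rate in rates.items()}

# Hypothetical model decisions (1 = favorable) and group labels.
outcomes = [1, 1, 1, 0, 1, 0, 0, 1, 0, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

rates = selection_rates(outcomes, groups)
ratios = disparate_impact_ratio(rates, reference_group="A")

# Assumed threshold for illustration only (four-fifths rule).
THRESHOLD = 0.8
flagged = [group for group, ratio in ratios.items() if ratio < THRESHOLD]
print(rates, ratios, flagged)
```

A playbook would typically record several such metrics side by side, since a single ratio can hide differences in error rates between groups.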

Deliverables

- Bias Testing Playbook (roles, metrics catalog, test plans, reporting templates).

- Model-level bias assessment with prioritized mitigation recommendations.

- Customer-facing white paper (methodology, results, commitments, next steps).

Independent Model Validation — Healthcare Risk Scoring

Model risk management (MRM) review of a clinical risk model to verify fitness for purpose, performance, and documentation quality.

Process

- Independent technical review: Reproduce data pipelines and model training; test assumptions, feature selection, and controls.

- Documentation review: Evaluate performance metrics, decisions and their justifications, and alignment with model documentation best practices.

- Challenger model: Run an independent model-selection and feature-selection exercise to test for data drift and evaluate alternative solutions.

- Final report: Summarize the findings in a final report built on a template that the internal team can reuse for future MRM reviews.
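
To make the data drift check concrete, here is a minimal Python sketch of one common drift statistic, the population stability index (PSI), which compares how a feature is distributed across bins in a baseline sample and in a recent sample. The source does not say which drift test is used; PSI, the bin edges, the sample values, and the function names are assumptions for illustration.

```python
import bisect
import math

def bin_shares(values, edges, eps):
    """Return the share of values in each bin, floored at eps to avoid log(0)."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        if v < edges[0] or v > edges[-1]:
            continue  # values outside the bin range are ignored in this sketch
        # Bins are [edge_i, edge_i+1); the last bin also includes the top edge.
        idx = min(bisect.bisect_right(edges, v) - 1, len(counts) - 1)
        counts[idx] += 1
    total = sum(counts)
    if total == 0:
        raise ValueError("no values fall inside the bin range")
    return [max(c / total, eps) for c in counts]

def psi(baseline, recent, edges, eps=1e-6):
    """Population stability index between two samples of one feature."""
    expected = bin_shares(baseline, edges, eps)
    actual = bin_shares(recent, edges, eps)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))

# Hypothetical feature values from training time and from recent scoring.
edges = [0.0, 0.25, 0.5, 0.75, 1.0]
baseline = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]
recent = [0.7, 0.8, 0.9, 0.9, 0.95]

drift_score = psi(baseline, recent, edges)
print(f"PSI: {drift_score:.3f}")
```

A PSI of zero means the binned distributions match; larger values indicate a larger shift. Any cut-off for "material" drift is a policy choice that the validation report should state explicitly.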

Deliverables

- Validation report (methods, results, residual risks, mitigation plans).

- MRM reporting template and framework.

AI Governance Audit — NIST AI RMF Alignment

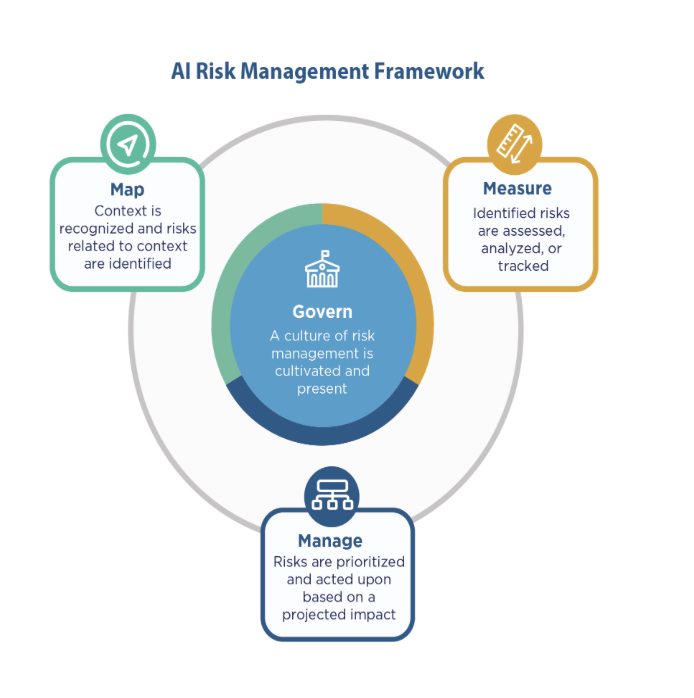

End-to-end audit of policies, roles, and controls mapped to the NIST AI RMF (Govern • Map • Measure • Manage).

Process

- Program review: Evaluate governance structure, roles, policies, and documentation.

- Control testing: Examine lifecycle controls (requirements, testing, monitoring, incident response) against RMF expectations.

- Gap analysis: Identify gaps, define remediation tasks, and name the model owner responsible for each.
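
One way to keep findings traceable to the framework is a small findings register keyed by RMF function. The Python sketch below is only an illustration: the `Finding` fields, the example controls, and the owner names are hypothetical, and only the four function names come from the NIST AI RMF.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum

class RmfFunction(Enum):
    GOVERN = "Govern"
    MAP = "Map"
    MEASURE = "Measure"
    MANAGE = "Manage"

@dataclass
class Finding:
    control: str           # lifecycle control that was tested
    function: RmfFunction  # RMF function the control maps to
    gap: str               # what the audit found missing
    remediation: str       # task proposed to close the gap
    owner: str             # person or role accountable for the task

def group_by_function(findings):
    """Group findings by RMF function, in Govern/Map/Measure/Manage order."""
    grouped = defaultdict(list)
    for finding in findings:
        grouped[finding.function].append(finding)
    return {fn: grouped[fn] for fn in RmfFunction if fn in grouped}

# Hypothetical findings for illustration only.
findings = [
    Finding("Production monitoring", RmfFunction.MANAGE,
            "No drift alerting", "Add monthly drift report", "Model owner A"),
    Finding("Approval policy", RmfFunction.GOVERN,
            "No sign-off record", "Adopt approval template", "Risk lead"),
    Finding("Incident response", RmfFunction.MANAGE,
            "No escalation path", "Define escalation runbook", "Model owner B"),
]

for function, items in group_by_function(findings).items():
    print(function.value, [item.control for item in items])
```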

Deliverables

- Audit report with RMF-mapped findings.

- Policy & procedure updates (templates for approvals, model documentation, bias testing, monitoring).

- Executive briefing deck for boards and regulators, including our third-party sign-off.